In a previous post, we discussed what web crawlers are and what they do for your website. In this article, we’re taking a look at Google’s crawler, Googlebot. Keep reading to learn more about the software responsible for discovering pages across the web.

In our previous post on the Basics of Crawling, Indexing, and Ranking, we introduced the concept of web crawlers and the work these bots do in collecting information from websites as part of the process by which data is indexed for the search engine results page (SERP).

Today, Google is the world’s dominant search engine, and its crawler, Googlebot, is the best-known web crawler. For your website to appear in Google’s search results, it must first be discovered, and that is Googlebot’s job. Thus, it is useful to have at least a basic understanding of how Googlebot works.

Googlebot: How Does It Work?

There are multiple ways for Googlebot to discover websites, including:

- Finding links on pages across the internet and following those links to discover new content; and

- Sitemaps submitted through Google Search Console.

Generally speaking, Googlebot behaves like a web browser. It visits your website to find internal and external links, and it fetches the content in order to build an index of your entire website.

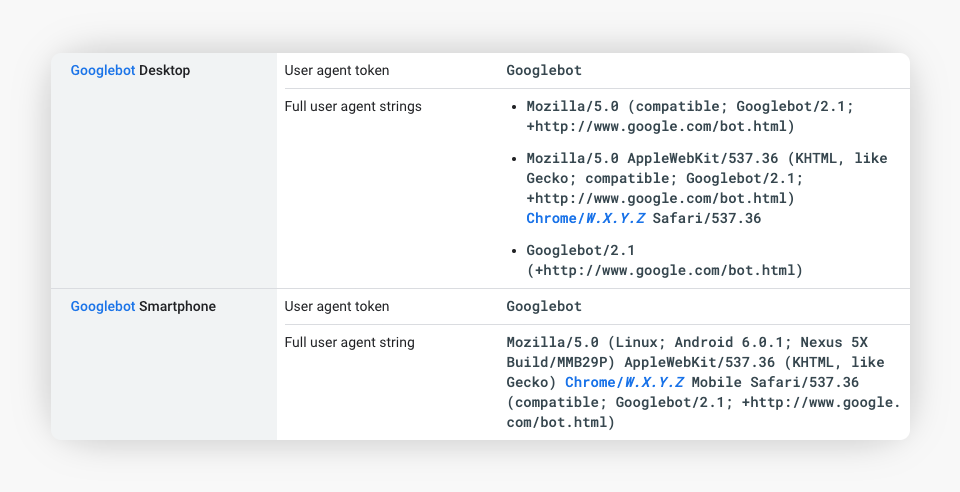

Googlebot uses two different types of crawlers: a desktop crawler and a mobile crawler (using a mobile viewport). Each of these crawlers simulates a user on the respective device. Both use the same user agent token (Googlebot), but you can tell which one visited your site by looking at the full user agent string.
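Here is a rough illustration of telling the two crawlers apart. This minimal Python sketch checks the full user agent string for the "Mobile" token, which appears in the smartphone string but not in the desktop one. The sample strings follow the general format Google publishes, but the Chrome version number (120.0.0.0) is a placeholder, and `classify_googlebot` is a hypothetical helper. Keep in mind that any client can claim to be Googlebot in its user agent, so this only tells you what a request says about itself.

```python
# Minimal sketch (assumption): distinguish Googlebot Smartphone from
# Googlebot Desktop by inspecting the full User-Agent string.
# The Chrome version 120.0.0.0 below is a placeholder value.

SMARTPHONE_UA = (
    "Mozilla/5.0 (Linux; Android 6.0.1; Nexus 5X Build/MMB29P) "
    "AppleWebKit/537.36 (KHTML, like Gecko) Chrome/120.0.0.0 Mobile "
    "Safari/537.36 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"
)
DESKTOP_UA = (
    "Mozilla/5.0 AppleWebKit/537.36 (KHTML, like Gecko; compatible; "
    "Googlebot/2.1; +http://www.google.com/bot.html) Chrome/120.0.0.0 "
    "Safari/537.36"
)


def classify_googlebot(user_agent: str) -> str:
    """Return 'smartphone', 'desktop', or 'not googlebot' (hypothetical helper)."""
    if "Googlebot" not in user_agent:
        return "not googlebot"
    # Both crawlers share the Googlebot token; only the smartphone
    # string also carries the "Mobile" token.
    return "smartphone" if "Mobile" in user_agent else "desktop"


if __name__ == "__main__":
    print(classify_googlebot(SMARTPHONE_UA))  # smartphone
    print(classify_googlebot(DESKTOP_UA))     # desktop
```

Other Google crawlers, such as Googlebot-Image, also contain the substring "Googlebot". Treat this as a coarse filter rather than a complete classifier.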

Googlebot does more than just fetch and index content. It also logs metadata that later serves as one of many ranking factors. Examples of the metadata collected by Googlebot include:

- The page HTTP response status code,

- robots meta value,

- viewport size, and

- response time.
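Google doesn’t publish exactly how it records this metadata. The sketch below only shows where each item on the list comes from on a typical page. The status code and response time come from the HTTP response itself, while the robots and viewport values come from `<meta>` tags in the HTML. It uses Python’s standard `html.parser` module. `MetaCollector` and `page_metadata` are hypothetical names, and the status and timing are passed in rather than fetched so the example runs offline.

```python
from html.parser import HTMLParser


class MetaCollector(HTMLParser):
    """Collects <meta name="..." content="..."> pairs from an HTML page."""

    def __init__(self) -> None:
        super().__init__()
        self.meta = {}

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        attr_map = dict(attrs)
        # html.parser lowercases attribute names but not their values.
        name = (attr_map.get("name") or "").lower()
        if name:
            self.meta[name] = attr_map.get("content") or ""


def page_metadata(status: int, elapsed_ms: float, html: str) -> dict:
    """Bundle the kinds of metadata listed above into one record (hypothetical)."""
    collector = MetaCollector()
    collector.feed(html)
    return {
        "status": status,                          # from the HTTP response
        "robots": collector.meta.get("robots"),    # from <meta name="robots">
        "viewport": collector.meta.get("viewport"),  # from <meta name="viewport">
        "response_time_ms": elapsed_ms,            # measured by the fetcher
    }
```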

The Googlebot Journey

Let’s take a look at what happens when someone submits a sitemap through Google Search Console to inform Google of the links on the site.

- Googlebot fetches a URL from the crawling queue. In this example, that is the sitemap.

- It checks whether the URL allows crawling by reading the robots.txt file (more on that below). Depending on the instructions contained in the robots.txt, Googlebot evaluates whether it should continue the crawling process or skip the URL.

- If the URL is not disallowed (that is, the instructions allow Googlebot to continue crawling), Googlebot searches the HTML for all available href links and adds any new URLs to the crawling queue.

- Then, Googlebot parses the HTML. Structured data is very helpful at this stage of the process, because it simplifies the task of understanding your web page’s content. Googlebot can execute JavaScript. However, Google recommends server-side rendering or pre-rendering, because it makes your website faster for both users and crawlers.

- Googlebot then repeats this same process using a different URL from the queue.

Notably, some of these tasks occur in parallel, instead of as independent steps in the crawling and indexing process.
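To make the journey concrete, here is a toy, single-threaded crawl loop in Python that follows the same steps. It takes a URL from the queue, checks it against robots.txt, collects href links, and queues any new URLs. It is a sketch under several assumptions, not how Googlebot is built:

- It starts from one URL instead of a sitemap.
- It runs the steps sequentially.
- It uses Python’s standard `urllib.robotparser` rather than Google’s parser.
- It takes a hypothetical `fetch` function (URL to HTML, or `None`) so it can run without network access.

```python
from collections import deque
from html.parser import HTMLParser
from urllib.parse import urldefrag, urljoin
from urllib.robotparser import RobotFileParser


class LinkExtractor(HTMLParser):
    """Collects href values from <a> tags."""

    def __init__(self) -> None:
        super().__init__()
        self.hrefs = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            href = dict(attrs).get("href")
            if href:
                self.hrefs.append(href)


def crawl(start_url, fetch, robots_txt, user_agent="Googlebot", limit=100):
    """Toy sequential crawl loop; `fetch` is a hypothetical URL -> HTML callable."""
    robots = RobotFileParser()
    robots.parse(robots_txt.splitlines())
    queue, seen, crawled = deque([start_url]), {start_url}, []
    while queue and len(crawled) < limit:
        url = queue.popleft()                        # 1. take a URL from the queue
        if not robots.can_fetch(user_agent, url):    # 2. check robots.txt
            continue
        html = fetch(url)
        if html is None:
            continue
        crawled.append(url)
        extractor = LinkExtractor()
        extractor.feed(html)                         # 3. find href links
        for href in extractor.hrefs:
            link, _fragment = urldefrag(urljoin(url, href))
            if link not in seen:                     # queue only new URLs
                seen.add(link)
                queue.append(link)
        # 4. parsing/rendering the page for indexing would happen here
    return crawled                                   # 5. loop repeats with the next URL
```

For example, with a robots.txt of `User-agent: *` and `Disallow: /private/`, any queued URL under /private/ is skipped before it is fetched.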

Googlebot and the Robots.txt File

The robots.txt file is a plain text file that follows the Robots Exclusion Protocol (REP) and gives instructions to web crawlers like Googlebot. Through the file, site owners use the REP to communicate information such as exclusions (partial or complete), links to sitemaps, crawl rates, and other custom instructions that some crawlers may use. Not every crawler honors every instruction; Googlebot, for example, ignores the crawl-delay rule.

“Googlebot and all respectable search engine bots will respect the directives in robots.txt.” Google: Advanced SEO

In an effort to make the REP an internet standard, Google released the source code used by their team to parse robots.txt files.
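You can experiment with robots.txt rules locally using Python’s standard-library `urllib.robotparser` module. This is not Google’s open-source parser, and its matching differs in at least one respect. Python applies the first matching rule in a group, while Google applies the most specific (longest) matching path. The site, paths, and sitemap URL below are hypothetical.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content for illustration.
ROBOTS_TXT = """\
User-agent: Googlebot
Disallow: /drafts/

User-agent: *
Disallow: /admin/

Sitemap: https://www.example.com/sitemap.xml
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())  # parse from a string instead of fetching

for agent, url in [
    ("Googlebot", "https://www.example.com/drafts/post"),
    ("Googlebot", "https://www.example.com/admin/"),
    ("SomeOtherBot", "https://www.example.com/admin/"),
]:
    print(agent, url, parser.can_fetch(agent, url))

# Sitemap lines are collected regardless of which group they appear in.
print(parser.site_maps())
```

Googlebot has its own group in this file, so it follows only that group and ignores the `*` group. That is why /admin/ is allowed for Googlebot but not for other crawlers.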

Important Notes on Robots.txt:

- The robots.txt file size limit is 500 KiB.

- Unsupported and unpublished rules (such as noindex) are ignored.

- If the robots.txt file becomes inaccessible because of a server error, Google treats the site as fully disallowed. If the error persists for more than 30 days, Google uses the last cached copy of the robots.txt file. If no cached copy is available, Googlebot assumes there are no crawl restrictions.

- You can’t selectively target either Googlebot Smartphone or Googlebot Desktop using robots.txt, since both crawlers obey the same product token (Googlebot) in robots.txt.

- Not having a robots.txt file is not a bad thing. It just means that any crawler will have full access to your site, also known as crawling without restrictions.

- Google generally caches the contents of your robots.txt file for up to 24 hours. Google may increase or decrease that cache lifetime based on the max-age directive of the Cache-Control HTTP header.

- If you need to suspend crawling temporarily, Google recommends returning a 503 HTTP status code for every URL on the site. Use this approach with care, though: serving HTTP error status codes for an extended period (more than two days) can result in your URLs being dropped from the Google index.

- The robots.txt rules apply only to the protocol, host, and port number where the robots.txt file is hosted. For example, the rules in https://onward.justia.com/robots.txt apply only to URLs with the following:

  - protocol: HTTPS,

  - host: onward.justia.com, and

  - port number: 443.

Keep this in mind if your website doesn’t redirect HTTP to HTTPS. In that case, the HTTP version of your site is governed by its own robots.txt file (for example, http://onward.justia.com/robots.txt).
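The notes above can be modeled in a few small functions. This is a simplified Python sketch, not Google’s implementation, and the function names are hypothetical. It makes these assumptions:

- Redirects have already been followed.
- 4xx responses other than 429 mean the file is missing (the "no robots.txt" case above).
- A 429 counts as a server error, which is how Google’s documentation treats it.

Google says only that it may adjust the cache lifetime based on max-age, so `cache_seconds` is an approximation.

```python
import re
from typing import Optional
from urllib.parse import urlsplit

DEFAULT_PORTS = {"http": 80, "https": 443}
DISALLOW_ALL = "User-agent: *\nDisallow: /\n"
NO_RESTRICTIONS = ""                    # behaves like having no robots.txt
DEFAULT_CACHE_SECONDS = 24 * 60 * 60    # "up to 24 hours"


def robots_scope(url: str) -> tuple:
    """(protocol, host, port) triple that a robots.txt file governs."""
    parts = urlsplit(url)
    scheme = parts.scheme.lower()
    return (scheme, parts.hostname, parts.port or DEFAULT_PORTS.get(scheme))


def robots_txt_applies(robots_url: str, page_url: str) -> bool:
    """True if the robots.txt at robots_url covers page_url."""
    return robots_scope(robots_url) == robots_scope(page_url)


def effective_rules(status: int, body: str, days_failing: int = 0,
                    cached_copy: Optional[str] = None) -> str:
    """Rules to apply after fetching robots.txt (assumes redirects were followed)."""
    if status == 429 or status >= 500:  # server errors
        if days_failing <= 30:
            return DISALLOW_ALL         # treated as fully disallowed
        if cached_copy is not None:
            return cached_copy          # error persists: use last cached copy
        return NO_RESTRICTIONS          # no cached copy: no crawl restrictions
    if 200 <= status < 300:
        return body                     # normal case: use the fetched file
    return NO_RESTRICTIONS              # e.g. 404: no robots.txt file at all


def cache_seconds(cache_control: Optional[str]) -> int:
    """Cache lifetime: max-age if present, else the 24-hour default."""
    if cache_control:
        match = re.search(r"(?:^|,)\s*max-age\s*=\s*(\d+)", cache_control, re.I)
        if match:
            return int(match.group(1))
    return DEFAULT_CACHE_SECONDS
```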


Blocking Googlebot From Visiting Your Site

There are several valid reasons for someone to block Googlebot from visiting a website or a specific web page. If you are interested in learning more about blocking Googlebot from content and the reasons someone may want to block a web page from crawlers, check out our previous article: How to Hide Content from Search Engines, and Why You May Want To.

Crawl Rate

Crawl rate refers to the number of requests per second that Googlebot makes to a given website while crawling it. Googlebot moderates this rate so that it does not bring your website down or consume your monthly bandwidth.

Crawl Budget

Crawl budget is a term used in the SEO field to describe the number of web pages Google can crawl on a site during a specific time period. Google determines the crawl budget by considering the crawl rate limit and the crawl demand.

Not all websites are the same, so this number is different for every website and depends on various factors. The main objective is to crawl your site without causing problems for your server.

Even though most websites don’t need to worry about crawl budget, following a few best practices can help you avoid wasting it. Some things you can do include:

- Avoiding duplicate content within your website.

- Fixing broken links.

- Creating high-value web pages rather than low-value ones.

The goal here is to ensure that all links crawled and indexed by Google contain relevant, unique content that both works with your SEO strategy and is targeted for your intended audience.
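One low-effort way to spot exact duplicate pages on your own site is to fingerprint each page’s text and group URLs that share a fingerprint. The Python sketch below assumes you already have a mapping of URL to extracted page text (for example, from a site export). The function names are hypothetical. The sketch only catches exact matches after normalizing case and whitespace; it does not catch near-duplicates.

```python
import hashlib
import re
from collections import defaultdict


def content_fingerprint(text: str) -> str:
    """Hash page text after collapsing whitespace and lowercasing it."""
    normalized = re.sub(r"\s+", " ", text).strip().lower()
    return hashlib.sha256(normalized.encode("utf-8")).hexdigest()


def find_exact_duplicates(pages: dict) -> list:
    """Group URLs whose normalized text is identical.

    `pages` maps URL -> extracted page text (a hypothetical input you would
    build from your own site export or crawl).
    """
    groups = defaultdict(list)
    for url, text in pages.items():
        groups[content_fingerprint(text)].append(url)
    return [sorted(urls) for urls in groups.values() if len(urls) > 1]
```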

Crawl Rate Limit

Googlebot and other search engine web crawlers follow certain best practices to avoid negatively impacting the website they’re crawling. The crawl rate limit is the maximum fetching rate for a given site, and this calculation is based on two factors:

- Crawl health, which measures how quickly and reliably your server responds to each request. If responses stay fast and steady, the limit goes up. If the site slows down or returns server errors, the limit goes down and Googlebot crawls your site less.

- The limit set in Google Search Console. Site owners can reduce the crawl rate for their property there (setting a higher limit doesn’t automatically increase crawling), and the setting resets to automatic after 90 days.
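Google hasn’t published the formula behind the crawl rate limit. The behavior described above can still be illustrated with a small controller: the limit rises while responses are healthy, falls when they aren’t, and never exceeds a limit set by the site owner. This Python sketch uses additive increase / multiplicative decrease, a common congestion-control pattern chosen purely for illustration. The class name, the step sizes, and the one-second "slow response" threshold are all assumptions.

```python
from typing import Optional


class CrawlRateLimit:
    """Toy crawl rate limit controller, in requests per second (hypothetical).

    Uses additive increase / multiplicative decrease (AIMD) for illustration;
    this is not Google's algorithm.
    """

    def __init__(self, start: float = 1.0, floor: float = 0.1,
                 owner_cap: Optional[float] = None,
                 slow_ms: float = 1000.0) -> None:
        self.rate = start
        self.floor = floor          # never drop below this rate
        self.owner_cap = owner_cap  # e.g. a limit set by the site owner
        self.slow_ms = slow_ms      # slower responses count as unhealthy

    def record(self, status: int, elapsed_ms: float) -> float:
        """Update the rate after one response and return the new rate."""
        healthy = status < 500 and elapsed_ms < self.slow_ms
        if healthy:
            self.rate += 0.5                            # steady: limit goes up
        else:
            self.rate = max(self.floor, self.rate / 2)  # errors/slowness: goes down
        if self.owner_cap is not None:
            self.rate = min(self.rate, self.owner_cap)  # respect the owner's cap
        return self.rate

    def delay_seconds(self) -> float:
        """Pause between requests implied by the current rate."""
        return 1.0 / self.rate
```

The `delay_seconds` value shows how a polite crawler can turn a requests-per-second limit into actual spacing between requests.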

Crawl Demand

Crawl demand is determined by the popularity of your website and the staleness of your content.

Important Notes:

- While you cannot choose how often Google will crawl your website, you can request a recrawl. If you request a recrawl, it can take anywhere from a few days to a few weeks. Repeatedly clicking the recrawl button will not speed up the process. Instead, you can monitor the progress using the Index Coverage report or the URL Inspection Tool.

- Google does not recommend limiting the crawl rate, but this setting is available to you if you are facing server problems caused by Googlebot.

- Requesting a new crawl doesn’t necessarily mean that Google will include the content in search results. If the content lacks value or is of low quality, it can be excluded.

Best Practices

- Verify that Googlebot can access and render the content of your website. You can do so by reviewing the rules in your robots.txt file (located in the top-level directory of your site) and the robots meta value in the source code of your web pages. You can also use Google Search Console, which offers a robots testing tool to verify that Googlebot is able to crawl your website.

- Content, metadata, headings, and structured data should be equivalent on both desktop and mobile versions. Keep in mind that indexed content will primarily come from the mobile version.

- Avoid lazy loading primary content that only loads upon user interaction. Googlebot doesn’t trigger user interactions such as clicking or typing, so that content will not load and, consequently, Googlebot will not crawl or index it. For more information, check out this resource.

- Try not to block resource directories from Googlebot using the disallow directive. Some of these resources may be needed to properly load your website. If these important resources get blocked from Googlebot, it will have trouble rendering your content.

- Monitor the server response codes from your robots.txt file and website. Serving the proper status codes will help Googlebot crawl your site without problems.
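Your server’s access logs are a practical place to check which status codes Googlebot is actually receiving. The Python sketch below assumes logs in the common "combined" format and tallies status codes for requests whose user agent mentions Googlebot. The function name and regular expression are illustrative. Because the user agent can be faked, Google recommends confirming real Googlebot traffic with a reverse DNS lookup before drawing conclusions.

```python
import re
from collections import Counter

# Matches the request, status, size, referrer, and user-agent fields of a
# "combined" format access-log line (an assumption about your server's logs).
LOG_LINE = re.compile(
    r'"\S+ \S+ [^"]*" (?P<status>\d{3}) \S+ "[^"]*" "(?P<agent>[^"]*)"'
)


def googlebot_status_counts(lines) -> Counter:
    """Count HTTP status codes served to requests claiming to be Googlebot."""
    counts = Counter()
    for line in lines:
        match = LOG_LINE.search(line)
        if match and "Googlebot" in match.group("agent"):
            counts[match.group("status")] += 1
    return counts
```

You can pass it any iterable of log lines, such as an open log file. It returns a `Counter` keyed by status code, so a growing number of 404s or 5xx responses stands out quickly.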

Additional FAQs About Googlebot

Q. Absolute or relative URLs, which is better?

A. The short answer: it doesn’t matter. Both types of links are treated the same way by Googlebot, as long as the URLs are properly set and valid.
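Relative links work because a crawler resolves them against the URL of the page they appear on. Python’s standard `urllib.parse.urljoin` shows the idea. The page paths below are hypothetical.

```python
from urllib.parse import urljoin

# Hypothetical page on which the links appear.
page = "https://onward.justia.com/category/seo/googlebot/"

# A relative and an absolute href that point at the same resource.
relative = urljoin(page, "../crawling-basics/")
absolute = urljoin(page, "https://onward.justia.com/category/seo/crawling-basics/")

print(relative)              # https://onward.justia.com/category/seo/crawling-basics/
print(relative == absolute)  # True
```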

Q. Can I prevent link discovery on my web pages?

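A. Yes. Add rel="nofollow" to individual links, or add a robots meta tag with the nofollow value to cover every link on a page, and Googlebot will take the instruction into account. Note that Google treats rel="nofollow" on individual links as a hint rather than a strict directive.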
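Here is a minimal Python sketch of how a crawler might collect only followable links. It skips `<a>` tags with `rel="nofollow"` and ignores all links when the page has a robots meta tag containing `nofollow`. It uses the standard `html.parser` module, and the class name is hypothetical. A real crawler would handle many more cases, such as `<base>` tags and crawler-specific meta tags like `<meta name="googlebot">`.

```python
from html.parser import HTMLParser


class FollowableLinks(HTMLParser):
    """Collect hrefs a crawler may follow, honoring nofollow (hypothetical)."""

    def __init__(self) -> None:
        super().__init__()
        self.links = []
        self.page_nofollow = False

    def handle_starttag(self, tag, attrs):
        attr = dict(attrs)
        if tag == "meta" and (attr.get("name") or "").lower() == "robots":
            # e.g. content="noindex, nofollow" -> ["noindex", "nofollow"]
            directives = (attr.get("content") or "").lower().replace(" ", "").split(",")
            if "nofollow" in directives:
                self.page_nofollow = True
        elif tag == "a" and attr.get("href"):
            rel = (attr.get("rel") or "").lower().split()
            if "nofollow" not in rel:  # skip individually nofollowed links
                self.links.append(attr["href"])

    def followable(self):
        """Links to follow; none if the page-level meta tag says nofollow."""
        return [] if self.page_nofollow else self.links
```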

Q. How long does it take Google to show my website in the search results?

A. In classic legal fashion, the answer is it depends. It can take anywhere from a few days to a few weeks for Google to show content on its SERPs. Remember, Google doesn’t guarantee that all pages on your site will be indexed or even crawled. Whether your site is included depends on the quality and relevance of the content, among other factors.

Q. Does Google have an index limit?

A. The short answer is no. However, while there is no limit, Google does try to focus its resources on pages that make sense to be indexed.

Q. Are URLs case sensitive?

A. Yes, upper or lowercase does matter in URLs, at least in the path and query string (domain names are not case sensitive). It is good practice to be consistent and to avoid the duplicate content caused by serving the same content on multiple URLs.
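Python’s standard `urllib.parse.urlsplit` illustrates the distinction: the hostname is normalized to lowercase, while the path is kept exactly as written. The URLs below are hypothetical.

```python
from urllib.parse import urlsplit

# Two hypothetical URLs that differ only in letter case.
a = urlsplit("https://Onward.Justia.com/Googlebot-Guide/")
b = urlsplit("https://onward.justia.com/googlebot-guide/")

print(a.hostname == b.hostname)  # True: hostnames are case-insensitive
print(a.path == b.path)          # False: the paths differ in case
```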

Resources

- Formalizing the Robots Exclusion Protocol Specification

- Googlebot

- Crawlers List: Googlebot

- How Google Interprets the Robots.txt Specification

- Create a Robots.txt File

- Spidering Hacks: 100 Industrial-Strength Tips & Tools

Final Thoughts: Why Do You Care?

Consider Googlebot your ally in executing your SEO strategy. If you want your content to be crawled and listed on Google, make sure you don’t restrict access to your site, and check Google Search Console regularly for insights about the index status of your website. In Google Search Console, you can also check for issues that may be affecting the indexability of your site.

View this clip to learn more about using Google Search Console to check for indexability issues on your site.