People commonly turn to search engines for answers to their questions. Understanding how these search engines crawl, index, and rank content is both interesting and essential to improving your website’s search performance.

One of the most common online activities is seeking answers on a search engine such as Google, Bing, or Yahoo. You type a query and get back results. Often, you’ll find what you were looking for on the first page of the results.

Understanding how search engines crawl, index, and rank websites is not only interesting but also essential for refining your website, your content quality, and your site structure. These improvements ultimately help your ranking performance as you strive for a first-page listing on the Search Engine Results Page (SERP).

Search Engine Websites

You can search for information across the web by using search engines. Attempting to locate information through search engines is perhaps the most common action on the entire World Wide Web.

According to GlobalStats, these are the top Search Engines of 2021 (and we all know which is the #1):

Google dominates the search engine industry so thoroughly that the word “Google” has become a verb. Today, Google processes an average of 40,000 search queries per second. Because Google is the most dominant search engine in the world, this article focuses on the mechanics of crawling, indexing, and ranking in Google search results. However, other search engines’ processes of crawling, indexing, and ranking may work similarly.

Crawling

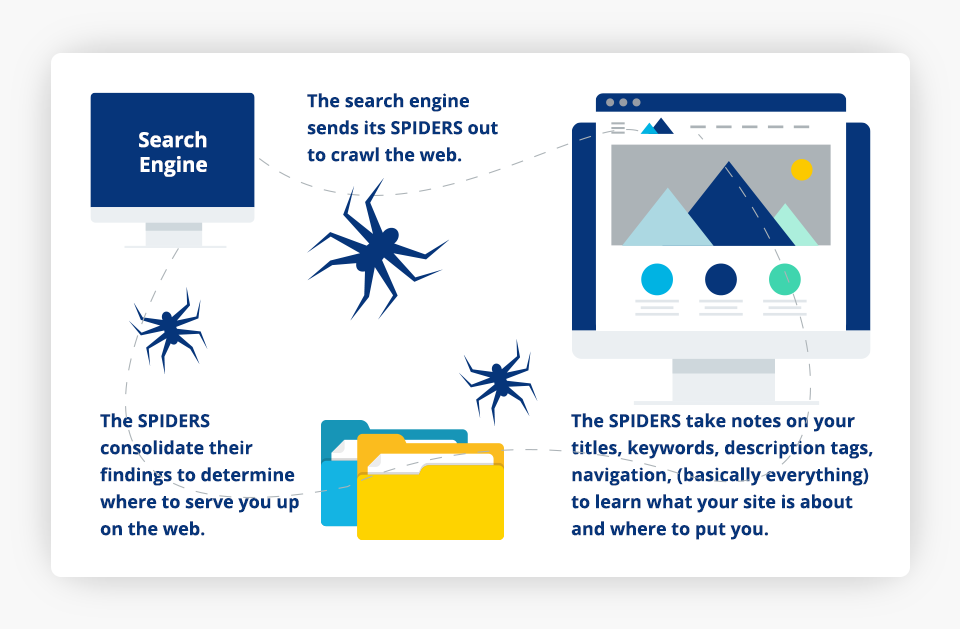

The word “crawler” describes what this software does: it crawls across the World Wide Web (WWW), following links from page to page. This is also why these programs are referred to as spiders or spiderbots. This automated software is designed to navigate the “Web” and collect information from the websites it visits so that the data can be indexed.

Websites are visited not just by regular users on web browsers, but also by all kinds of web crawlers. Getting your website crawled by these spider bots is a good thing. It means your site is being discovered and is accessible to the corresponding search engine.

You can identify these web crawlers by the User-Agent header value:

| Search Engine | Web Crawler User-Agent |
|---|---|
| Google | Googlebot |
| Bing | Bingbot |
| Yahoo | Slurp Bot |
| Baidu | Baiduspider |
| Yandex | YandexBot |
| DuckDuckGo | DuckDuckBot |
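
As a rough illustration of how the table above could be used, here is a minimal Python sketch that maps a User-Agent header value to a search engine. The `identify_crawler` helper and the `CRAWLER_TOKENS` mapping are hypothetical names, and matching on the lowercase token `slurp` for Yahoo is an assumption. Keep in mind that a User-Agent header can be spoofed, so a match is a hint rather than proof.

```python
from typing import Optional

# Hypothetical mapping from a lowercase User-Agent token to its search engine.
# Tokens are taken from the table above; "slurp" for Yahoo is an assumption.
CRAWLER_TOKENS = {
    "googlebot": "Google",
    "bingbot": "Bing",
    "slurp": "Yahoo",
    "baiduspider": "Baidu",
    "yandexbot": "Yandex",
    "duckduckbot": "DuckDuckGo",
}


def identify_crawler(user_agent: str) -> Optional[str]:
    """Return the search engine whose token appears in the header, if any."""
    ua = user_agent.lower()
    for token, engine in CRAWLER_TOKENS.items():
        if token in ua:  # case-insensitive substring match
            return engine
    return None  # no known crawler token found


# Example header values (illustrative strings, not captured traffic).
print(identify_crawler("Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"))
print(identify_crawler("Mozilla/5.0 (Windows NT 10.0; Win64; x64) Chrome/120.0"))
```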

Robots.txt

Web crawlers follow the directives listed in your robots.txt file. This file is not required, but when you use one, it is placed in the root directory of your website. You only need it to block access to some of your paths, list your sitemaps, or disallow specific crawlers. Keep in mind that it remains up to each crawler to respect these directives.
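
To make this more concrete, the sketch below uses Python’s standard `urllib.robotparser` module to read a small robots.txt and check which URLs a crawler may fetch. The file content, the `example.com` domain, the paths, and the `BadBot` crawler name are all placeholders invented for illustration.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt: block one path for everyone, block one crawler
# entirely, and list a sitemap. All names and URLs are placeholders.
ROBOTS_TXT = """\
User-agent: *
Disallow: /private/

User-agent: BadBot
Disallow: /

Sitemap: https://www.example.com/sitemap.xml
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())  # parse from a string instead of fetching

# A well-behaved crawler would run checks like these before requesting a URL.
print(parser.can_fetch("Googlebot", "https://www.example.com/blog/post"))      # True
print(parser.can_fetch("Googlebot", "https://www.example.com/private/notes"))  # False
print(parser.can_fetch("BadBot", "https://www.example.com/blog/post"))         # False
print(parser.site_maps())  # list of Sitemap URLs (Python 3.8+)
```

Nothing in this file technically stops a crawler from requesting a blocked path. The check only works when the crawler chooses to perform it.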

I want to hide content from getting indexed, can I do that?

Definitely! You can block web crawlers from indexing your content using the robots meta tag. Set its value to “noindex, nofollow,” but be careful with this instruction and use it only when necessary.
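
If you want to verify which pages carry this instruction, a small script can help. The following Python sketch uses the standard `html.parser` module to collect the directives of a robots meta tag. The `RobotsMetaParser` class, the `robots_directives` helper, and the sample page are hypothetical and only meant to show the idea.

```python
from html.parser import HTMLParser


class RobotsMetaParser(HTMLParser):
    """Collects the directives found in <meta name="robots"> tags."""

    def __init__(self):
        super().__init__()
        self.directives = set()

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        attributes = dict(attrs)
        if (attributes.get("name") or "").lower() == "robots":
            content = attributes.get("content") or ""
            # Split "noindex, nofollow" into individual lowercase directives.
            self.directives.update(
                part.strip().lower() for part in content.split(",") if part.strip()
            )


def robots_directives(html: str) -> set:
    """Return the set of robots meta directives declared in the page."""
    parser = RobotsMetaParser()
    parser.feed(html)
    return parser.directives


# Hypothetical page that asks crawlers not to index it or follow its links.
PAGE = """<html><head>
<meta name="robots" content="noindex, nofollow">
<title>Private page</title>
</head><body>Hidden content</body></html>"""

print("noindex" in robots_directives(PAGE))
```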

Sitemaps

A sitemap is a file that helps search engines crawl your website more efficiently. Web crawlers are generally able to discover pages by following the links on your website, but without a sitemap they may overlook some of your content.
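
As an illustration of what such a file contains, here is a minimal Python sketch that builds an XML sitemap with the standard `xml.etree.ElementTree` module. The `build_sitemap` helper, the URLs, and the dates are made up for this example. A real sitemap would normally be generated by your CMS or build process.

```python
import xml.etree.ElementTree as ET

# Namespace defined by the sitemaps.org protocol.
SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(urls):
    """Build a sitemap XML string from (location, last-modified date) pairs."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for loc, lastmod in urls:
        url = ET.SubElement(urlset, "url")
        ET.SubElement(url, "loc").text = loc          # page address
        ET.SubElement(url, "lastmod").text = lastmod  # date in YYYY-MM-DD form
    return ET.tostring(urlset, encoding="unicode")


# Hypothetical pages of an example site.
SITEMAP_XML = build_sitemap([
    ("https://www.example.com/", "2021-06-01"),
    ("https://www.example.com/blog/how-search-works", "2021-06-15"),
])
print(SITEMAP_XML)
```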

Important Things About Web Crawlers

- These bots will constantly visit your website to check for new or updated content.

- Crawl budget: a combination of “crawl demand” and “crawl capacity”.

  - Crawl demand: how many URLs Google wants to crawl from your website.

  - Crawl capacity: how many URLs Google thinks the server can handle.

Indexing

As web crawlers search the Internet to discover new or updated pages, they add the pages into a list that Google will then use at the indexing stage. Google will visit each page discovered during crawling to analyze and understand its content. Having a solid technical SEO foundation is crucial, as it will make it easier for search engines to access and understand the content on your site.

In layman’s terms, at this stage, search engines extract the headings and other texts out of each URL, then store and organize the information on their servers. Even when your site’s content has been indexed, search engines will repeat this process of crawling and indexing your content over and over, so their index remains up to date.
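
The idea of extracting text and organizing it for lookup can be sketched with a toy inverted index, which maps each word to the pages that contain it. This is a simplified teaching example under our own assumptions, not a description of how Google’s index is actually built. The `build_index` and `search` functions and the sample pages are hypothetical.

```python
import re
from collections import defaultdict


def build_index(pages):
    """Map each lowercase word to the set of URLs whose text contains it."""
    index = defaultdict(set)
    for url, text in pages.items():
        for word in re.findall(r"[a-z0-9]+", text.lower()):
            index[word].add(url)
    return index


def search(index, query):
    """Return the URLs that contain every word of the query."""
    words = re.findall(r"[a-z0-9]+", query.lower())
    if not words:
        return set()
    results = set(index.get(words[0], set()))
    for word in words[1:]:
        results &= index.get(word, set())  # keep only pages with all words
    return results


# Hypothetical crawled pages: URL -> extracted headings and text.
PAGES = {
    "https://www.example.com/a": "How search engines crawl websites",
    "https://www.example.com/b": "How to bake bread at home",
}

INDEX = build_index(PAGES)
print(search(INDEX, "search engines"))
```

Re-running `build_index` after a page changes mirrors the repeated crawling and indexing described above: the index only stays useful if it is refreshed.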

Back to the spider’s job: as it finds new data on a site, the information is analyzed, organized, and added to Google’s searchable index. Keep in mind that users won’t necessarily find all indexed content. Hence the need to add high-quality, original, and relevant content that satisfies your website visitors’ queries.

“The most important is organizing: there are hundreds of billions of web pages that are out there. Our job is to filter through that and to really give you what you are looking for at that moment in time.”

Cathy Edwards

Vice President of Engineering at Google

Important Things About Indexing

- Google doesn’t have any limits for indexing.

- Google could penalize sites that don’t follow their quality guidelines.

- JSON-LD has become the number 1 structured data format.

- The proper use of structured data and correct markup helps Google better understand the context of your website.

- There’s no guarantee that Google will index any particular page.
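
To show what JSON-LD structured data looks like, the Python sketch below builds a small schema.org `Article` description and wraps it in the script tag that would be placed in a page’s HTML. The `article_json_ld` helper and all the values passed to it are placeholders chosen for illustration.

```python
import json

SCRIPT_OPEN = '<script type="application/ld+json">'
SCRIPT_CLOSE = "</script>"


def article_json_ld(headline, author, date_published):
    """Return an HTML snippet with JSON-LD markup for a schema.org Article."""
    data = {
        "@context": "https://schema.org",
        "@type": "Article",
        "headline": headline,
        "author": {"@type": "Person", "name": author},
        "datePublished": date_published,
    }
    return SCRIPT_OPEN + json.dumps(data, indent=2) + SCRIPT_CLOSE


# Placeholder values; replace them with the details of your own page.
SNIPPET = article_json_ld(
    headline="How Search Engines Crawl, Index, and Rank",
    author="Jane Doe",
    date_published="2021-06-15",
)
print(SNIPPET)
```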

Ranking

Getting your website indexed is only the first step. A robust technical SEO foundation and relevant content that is valuable to your target audience make the difference between appearing on the first page of the search results and being lost on page three and beyond.

Many websites may offer similar content, services, or products, so you need to differentiate your site by providing relevant and unique content while offering a rich user experience for your visitors.

The Ranking Factors

Search engines, particularly Google, have created ranking systems made up of several algorithms which take into consideration numerous factors, such as:

- Page Speed

- Core Web Vitals

- Schema markup

- Mobile friendliness

- Security (whether your site is free of XSS vulnerabilities and served over the HTTPS protocol)

- Relevant and unique content

- Freshness

- Authoritative domain

- Backlinks from authoritative sources

- User location

- User settings

“Ranking is important because if we simply return the million pages that match your search query, that’s not particularly helpful. And so we need to rank the pages that you might find useful. Hopefully, these are at the top of your results.”

Pandu Nayak

Member Technical Staff at Google

Important Things About Ranking

- Google won’t rank you higher if you advertise on Google.

- Google doesn’t accept payments to rank your website higher.

- Google’s index is constantly changing, and so are rankings.

- SEO is crucial for a website’s rankings and visibility.

- Results are affected by the user’s location and settings.

Additional Resources

- Trillions of Questions, No Easy Answers: A (Home) Movie About How Google Search Works

- Introduction to Robots.txt

- Discover How Google Search Works

- How Search Algorithms Work

- Google Quality Raters

- Web Vitals: Essential Metrics for a Healthy Site

Final Thoughts: Why Do You Care?

One of the main goals of your website is to get visitors, and one of the most important ways to drive traffic to your site is getting your content listed in the search engine results. After reading this article, you are one step closer to achieving your goals because you have learned more about how search engines crawl, index, and rank websites.